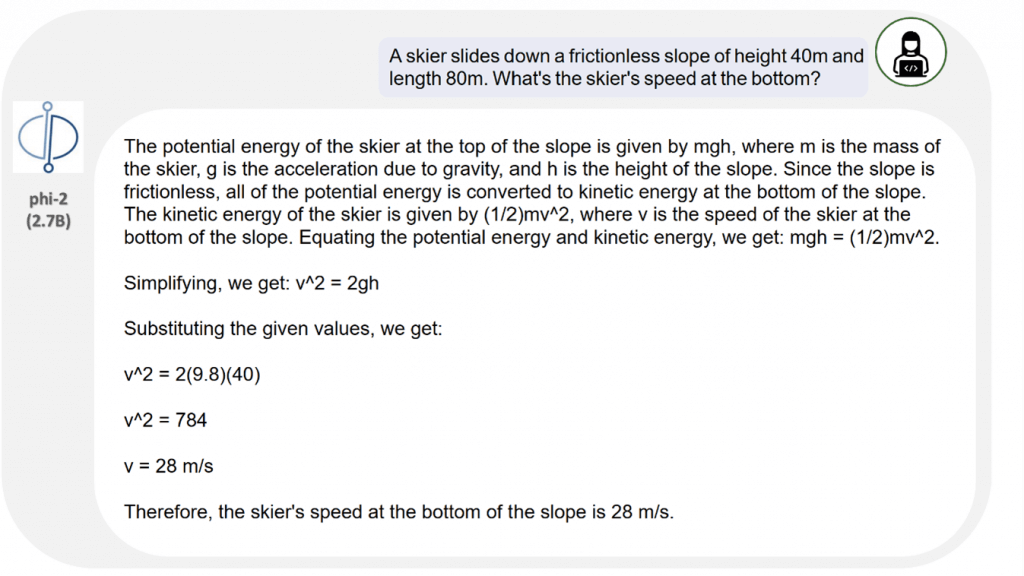

Microsoft has recently unveiled phi-2, a small language model that stands out for how much it can do with so few parameters. With just 2.7 billion parameters, phi-2 outperforms larger competitors such as Google’s Gemini Nano 2 and Meta’s Llama 2 7B on several of the benchmarks Microsoft reported. In this blog post, we will delve into the technical innovations behind phi-2, its practical applications, and the potential impact it could have on the future of AI.

Phi-2 is the latest model in Microsoft Research’s phi series and builds on its predecessors, phi-1 and phi-1.5. Phi-1 was released in June 2023 with 1.3 billion parameters. It focused on Python code generation and was trained largely on “textbook-quality” data, including synthetic textbooks and exercises. Phi-1.5 was released in September 2023, also with 1.3 billion parameters. It extended the same approach to common-sense reasoning and natural-language understanding by training on synthetic, textbook-style text.

The goal of phi-2 is to show that a small model trained on carefully chosen data can rival much larger ones. Phi-2 is a text-only model: it generates text and code, not images. Its training mix combines synthetic datasets built to teach common-sense reasoning and general knowledge (science, daily activities, and theory of mind, among others) with web data filtered for educational value and content quality. Despite having fewer than half the parameters of Llama 2 7B and Mistral 7B, phi-2 outperforms them on many of Microsoft’s reported benchmarks. On multi-step reasoning tasks such as coding and math, it even beats the far larger Llama 2 70B.

Gemini Nano 2 has 3.25 billion parameters, and Llama 2 7B and Mistral 7B have about 7 billion each. Phi-2 is therefore the smallest model in this group, yet it matches or beats the others on many of Microsoft’s reported benchmarks. Compared with its own predecessors, which both have 1.3 billion parameters, phi-2 is roughly twice as large and considerably more capable. Part of that gain comes from transferring knowledge from phi-1.5 into phi-2 during training, a step for which Microsoft has published few details. The result is a model that stays compact and efficient while being far more adaptable than phi-1 and phi-1.5.
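To put these size differences in concrete terms, here is a rough back-of-the-envelope sketch. It assumes nominal parameter counts (2.7B for phi-2, 3.25B for Gemini Nano 2, 7B and 70B for Llama 2) and counts only the memory needed to store the weights, ignoring activations, the KV cache, and runtime overhead. The function names `weight_memory_gb` and `size_ratio` are illustrative, not part of any library.

```python
# Rough weight-memory comparison for the models discussed in this post.
# Assumptions: nominal parameter counts; only the weights are counted
# (no activations, KV cache, or framework overhead).

PARAMS_BILLIONS = {
    "phi-2": 2.7,
    "Gemini Nano 2": 3.25,
    "Llama 2 7B": 7.0,
    "Llama 2 70B": 70.0,
}

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params_billions: float, dtype: str = "fp16") -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives billions of bytes.
    """
    return params_billions * BYTES_PER_PARAM[dtype]


def size_ratio(model: str, baseline: str = "phi-2") -> float:
    """How many times larger `model` is than `baseline` by parameter count."""
    return PARAMS_BILLIONS[model] / PARAMS_BILLIONS[baseline]


if __name__ == "__main__":
    for name, params in PARAMS_BILLIONS.items():
        print(f"{name:>14}: {weight_memory_gb(params):6.1f} GB at fp16, "
              f"{size_ratio(name):5.1f}x phi-2")
```

At 16-bit precision, phi-2’s weights need about 5.4 GB, compared with roughly 14 GB for a 7-billion-parameter model, and Llama 2 70B has about 26 times as many parameters as phi-2. Real memory use at inference time will be higher than these figures.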

What makes phi-2 special

Microsoft attributes phi-2’s performance to two main factors. The first is training data quality: rather than relying on raw web crawls, phi-2 learns from “textbook-quality” synthetic data and carefully filtered web content. The second is a training technique Microsoft calls scaled knowledge transfer, which embeds the knowledge of phi-1.5 into phi-2 to speed up training convergence and improve benchmark scores. Architecturally, phi-2 is a Transformer-based model trained with a next-word prediction objective. This suggests that its edge over other small language models comes mainly from data and training strategy rather than from a new model design.

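In short, Microsoft’s phi-2 shows that a carefully trained small language model can compete with models several times its size. It is a base model: it has not been aligned with reinforcement learning from human feedback (RLHF) or instruction fine-tuned, and Microsoft made it available primarily to encourage research. Even so, its strong results at just 2.7 billion parameters make it a promising foundation for cheaper, more accessible language models, and we expect it to have a significant influence on how such models are built.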