A recent tweet by Steven Heidel, a member of the OpenAI team, has sparked considerable discussion in the artificial intelligence (AI) community. Heidel’s tweet, “Brace yourself AGI is coming,” was a response to Jan Leike’s announcement of a new approach OpenAI is taking to address the risks associated with advanced AI systems. Such powerful systems could be hazardous if not managed appropriately. Let’s look at the details of OpenAI’s initiatives, particularly its preparedness framework, which is designed to keep evolving AI technologies safe and reliable.

OpenAI’s Preparedness Framework: Navigating AI Risks

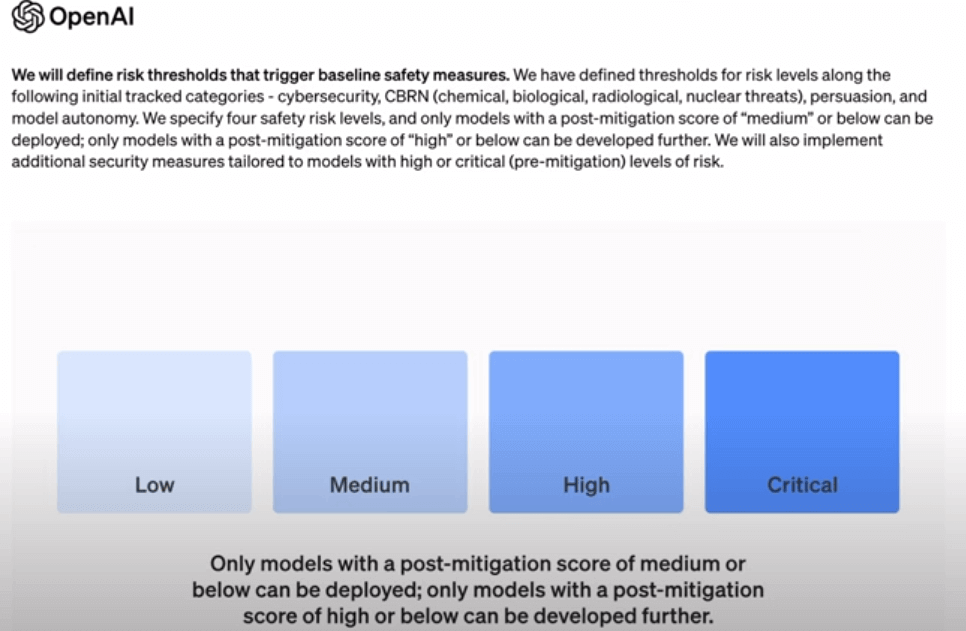

OpenAI is actively developing a preparedness framework, akin to a set of rules or a strategic plan, to safeguard against unforeseen issues as AI continues to advance. The primary goal is to prevent AI technologies from behaving unexpectedly or causing harm. Central to this framework is the assessment of the risks posed by different AI systems. OpenAI envisions a scorecard system that quantifies the potential risks associated with an AI model. If an AI system is deemed too risky, OpenAI may opt not to use it or may modify it to enhance safety.

Vulnerable Areas of AI Impact

Cybersecurity:

One critical concern within the framework is the potential misuse of AI for infiltrating computer systems and causing disruptions. If AI becomes adept at hacking into important systems or stealing sensitive information, it could pose a significant threat.

Chemical, Biological, Radiological, and Nuclear Threats:

OpenAI acknowledges the risk that AI could help someone create dangerous weapons, such as biological weapons. The framework is designed to prevent the misuse of AI in these areas so that it does not lead to harmful consequences.

Persuasion:

The framework also addresses the persuasive capabilities of AI, particularly in influencing people’s beliefs or actions, such as during elections. OpenAI aims to curb any potential misuse of AI for manipulative purposes.
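To make the scorecard idea more concrete, here is a minimal sketch of how per-area risk ratings could feed a go/no-go decision. It is only an illustration under stated assumptions: the four level names, the "worst area wins" rule, the `DEPLOY_MAX` threshold, and the function names are hypothetical choices made for this example, not OpenAI’s actual implementation.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Hypothetical risk levels, ordered from least to most severe."""
    LOW = 0
    MEDIUM = 1
    HIGH = 2
    CRITICAL = 3


# Assumed threshold: the highest overall rating at which a model may be used as-is.
DEPLOY_MAX = Risk.MEDIUM


def overall_risk(scorecard):
    """Return the overall rating, taken here as the worst rating in any area."""
    return max(scorecard.values())


def decide(scorecard):
    """Map a scorecard to one of the two outcomes described in the article."""
    if overall_risk(scorecard) <= DEPLOY_MAX:
        return "use"
    return "modify or withhold"


# Example ratings for the three areas discussed above (made-up values).
scorecard = {
    "cybersecurity": Risk.LOW,
    "cbrn": Risk.MEDIUM,
    "persuasion": Risk.HIGH,
}

print(overall_risk(scorecard).name)
print(decide(scorecard))
```

Taking the maximum means a single high-risk area is enough to block a model, which matches the article’s point that a system deemed too risky would be modified or not used at all.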

GPT-5: OpenAI’s Next Advancement

OpenAI is currently focusing on developing GPT-5, a substantial undertaking that underscores the significance of responsible AI development. Ensuring that GPT-5 is not only powerful but also safe and compliant with the framework’s rules is a top priority. OpenAI emphasizes that its preparedness framework will keep evolving, with a dedicated team continuously monitoring and enhancing safety protocols.

The Significance of OpenAI’s Preparedness Framework

OpenAI’s commitment to AI ethics is evident in its preparedness framework. As the tech world grapples with the increasing importance of ethical considerations in AI development, OpenAI’s strategic approach demonstrates a dedication to creating AI that is not only intelligent but also secure. The framework reflects OpenAI’s recognition of the potential harm that powerful AI systems can cause, emphasizing the need for responsible usage.

As AI capabilities expand rapidly, OpenAI’s work on this framework is an important step towards ensuring that AI is used responsibly, avoiding harm and prioritizing the well-being of all. As we embrace the exciting possibilities of AI, OpenAI’s emphasis on ethical development is a reminder to wield this powerful tool with caution.